Vendor integrations

A major motivation of the skpro project is a unified, domain-agnostic and approachable model assessment workflow. While many packages tackle probabilistic modelling in the frequentist and Bayesian domains, it is hard to compare models across these packages in a consistent, meaningful and convenient way.

Therefore, skpro integrates existing prediction algorithms from other frameworks so that they can be evaluated within a fair and convenient model comparison workflow.

Currently, Bayesian methods are integrated via the posterior predictive samples they produce. Various adapters transform these samples into skpro’s unified distribution interface, which offers easy access to the essential properties of the predicted distributions.
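To illustrate what such an adapter does, here is a minimal sketch, assuming a sampler has already returned posterior predictive draws for a single test point. The class name SampleDistribution is hypothetical and is not skpro's actual adapter; the mean and standard deviation are Monte Carlo estimates, and the density is a Gaussian kernel density estimate (a swapped-in technique chosen for illustration).

```python
import numpy as np


class SampleDistribution:
    """Hypothetical sketch, not skpro's class: a predictive distribution for ONE
    test point, represented only by its posterior predictive samples."""

    def __init__(self, samples):
        self.samples = np.asarray(samples, dtype=float)

    def mean(self):
        # Monte Carlo estimate of the predictive mean
        return float(np.mean(self.samples))

    def std(self):
        # Monte Carlo estimate of the predictive standard deviation
        return float(np.std(self.samples, ddof=1))

    def pdf(self, x):
        # Gaussian kernel density estimate; bandwidth from Silverman's rule of thumb
        n = self.samples.size
        h = 1.06 * self.std() * n ** (-1 / 5)
        z = (x - self.samples) / h
        return float(np.mean(np.exp(-0.5 * z**2)) / (h * np.sqrt(2 * np.pi)))


# Stand-in for the draws a sampler such as PyMC3 would return for one test point
rng = np.random.default_rng(0)
dist = SampleDistribution(rng.normal(loc=2.0, scale=0.5, size=2000))
print(dist.mean(), dist.std(), dist.pdf(2.0))
```

Once samples are wrapped this way, downstream metrics only need mean, std or pdf and no longer care which framework produced the draws.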

PyMC3

The following Bayesian linear regression example demonstrates the PyMC3 integration. Crucially, the model definition function must define the y_pred variable, which represents the prediction target of the employed model.

```python
import pymc3 as pm

from skpro.metrics import log_loss
from skpro.base import BayesianVendorEstimator
from skpro.vendors.pymc import PymcInterface
from skpro.workflow.manager import DataManager


# Define the model using PyMC's syntax
def pymc_linear_regression(model, X, y):
    """Defines a linear regression model in PyMC

    Parameters
    ----------
    model: PyMC model
    X: Features
    y: Labels

    The model must define a ``y_pred`` model variable that represents the prediction target
    """
    with model:
        # Priors
        alpha = pm.Normal('alpha', mu=y.mean(), sd=10)
        betas = pm.Normal('beta', mu=0, sd=10, shape=X.get_value(borrow=True).shape[1])
        sigma = pm.HalfNormal('sigma', sd=1)

        # Model (defines y_pred)
        mu = alpha + pm.math.dot(betas, X.T)
        y_pred = pm.Normal("y_pred", mu=mu, sd=sigma, observed=y)


# Plug the model definition into the PyMC interface
model = BayesianVendorEstimator(
    model=PymcInterface(model_definition=pymc_linear_regression)
)

# Run prediction and print the log loss
data = DataManager('boston')
y_pred = model.fit(data.X_train, data.y_train).predict(data.X_test)
print('Log loss: ', log_loss(data.y_test, y_pred, return_std=True))

# Output:
# Log loss: (3.0523741768448449, 0.1443656210555945)
```
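The log loss scores each probabilistic prediction by the negative log of the predicted density at the observed target, so lower is better. The sketch below reproduces that idea with plain NumPy. It is a hypothetical re-implementation, not skpro's code, and it assumes that the second value returned with return_std=True is the standard error of the mean loss; check skpro's metrics documentation for the exact definition.

```python
import numpy as np


def log_loss_sketch(densities, return_std=False):
    """Hypothetical re-implementation for illustration, not skpro's code.

    densities: predicted pdf values p_i(y_i), each evaluated at the observed target y_i.
    Assumption: the second returned value is the standard error of the mean loss.
    """
    losses = -np.log(np.asarray(densities, dtype=float))  # per-sample log loss
    mean = float(np.mean(losses))
    if not return_std:
        return mean
    stderr = float(np.std(losses) / np.sqrt(losses.size))
    return mean, stderr


mean, stderr = log_loss_sketch([0.5, 0.25, 0.125], return_std=True)
print(mean, stderr)  # mean is 2*ln(2), about 1.386
```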

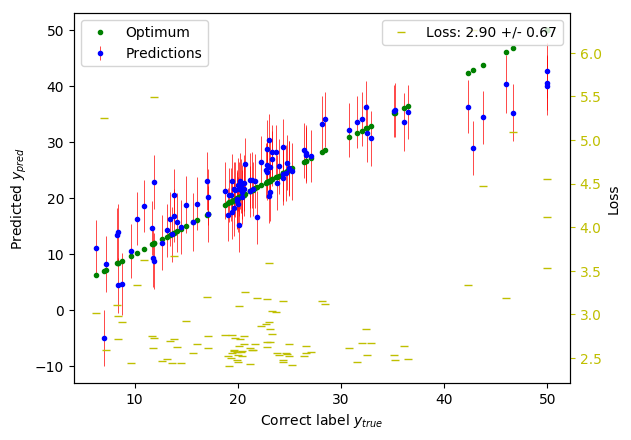

As usual, we can visualise the performance using the helper plot_performance(data.y_test, y_pred):

Please refer to PyMC3’s own project documentation to learn more about the model definitions PyMC3 supports.

Integrate other models

skpro’s base classes provide the scaffolding to quickly integrate arbitrary Bayesian or frequentist models. Please read the documentation on extension and model integration to learn more.
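The general adapter idea can be sketched without any framework. The class SamplingModelAdapter and the callables fit_fn and sample_fn below are hypothetical names, not skpro's base class API. The sketch wraps any model that can draw predictive samples behind a fit/predict pair. For brevity it summarises each test point by the sample mean and standard deviation. The example model is a frequentist least-squares fit with Gaussian residual noise.

```python
import numpy as np


class SamplingModelAdapter:
    """Hypothetical adapter (not skpro's API): wraps any model that can draw
    predictive samples and exposes a fit/predict interface."""

    def __init__(self, fit_fn, sample_fn, n_samples=500):
        self.fit_fn = fit_fn        # fit_fn(X, y) -> fitted state
        self.sample_fn = sample_fn  # sample_fn(state, X, n) -> array of shape (n, len(X))
        self.n_samples = n_samples

    def fit(self, X, y):
        self.state_ = self.fit_fn(X, y)
        return self

    def predict(self, X):
        draws = np.asarray(self.sample_fn(self.state_, X, self.n_samples))
        # One column of draws per test point; summarise each column by mean and std
        return draws.mean(axis=0), draws.std(axis=0, ddof=1)


def fit_ols(X, y):
    # Ordinary least squares with an intercept column, plus residual noise scale
    Xb = np.column_stack([np.ones(len(X)), X])
    coef, *_ = np.linalg.lstsq(Xb, y, rcond=None)
    resid_std = np.std(y - Xb @ coef, ddof=Xb.shape[1])
    return coef, resid_std


def sample_ols(state, X, n):
    # Draw predictive samples: point prediction plus Gaussian residual noise
    coef, resid_std = state
    Xb = np.column_stack([np.ones(len(X)), X])
    rng = np.random.default_rng(0)  # fixed seed for reproducibility
    return Xb @ coef + rng.normal(0.0, resid_std, size=(n, len(X)))


rng = np.random.default_rng(1)
X = rng.uniform(0, 10, size=(200, 1))
y = 3.0 + 2.0 * X[:, 0] + rng.normal(0.0, 1.0, size=200)
model = SamplingModelAdapter(fit_ols, sample_ols).fit(X, y)
mean, std = model.predict(np.array([[0.0], [5.0]]))
print(mean, std)
```

Because the adapter only relies on the two callables, the same wrapper could in principle host a Bayesian sampler instead of the least-squares model.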